A recent wave of nostalgia led me to buy an old Pentium-based Compaq Armada 1592DT notebook (and to build an AMD K6-2 gaming PC, complete with a 3dfx Voodoo 2!). Continuing this journey, I decided to revisit a small part of my childhood: programming with QBasic on DOS.

My first steps in programming as a child were with QBasic on an older 486 system that only had DOS 6.22 and Windows 3.1. A group of friends and I discovered QBasic (probably via the stack of paper manuals that came with our parents’ PCs) and began writing primitive programs that prompted for input, saved the answers in variables and led the user through a series of questions, printing out responses in return.

For fun, I decided to see how much effort it would take to write a simple screensaver (remember those?) in QBasic. It turns out very little effort is required.

QBasic on a Modern System

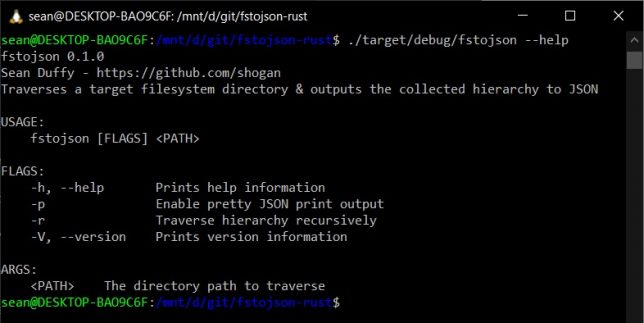

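The easiest approach is to use DOSBox, which I installed on my Windows 10 machine. I then edited the DOSBox configuration file so that it runs in a larger window. It is as simple as setting these values in the [sdl] section of the .conf file (windowresolution only takes effect when output is something other than surface, hence the ddraw setting):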

```
windowresolution=1920x1080
output=ddraw
```

Next, I set up automatic mounting of a “DOS Apps” folder I created on my D: drive. Find the [autoexec] section in the DOSBox .conf file and add something like this:

```
mount C D:\Tools\DOS_APPS
C:
cd QB45
qb.exe
```

This mounts D:\Tools\DOS_APPS as the C: drive of your DOSBox machine, switches to that drive, changes into the QB45 directory and runs qb.exe (the QuickBASIC 4.5 executable).

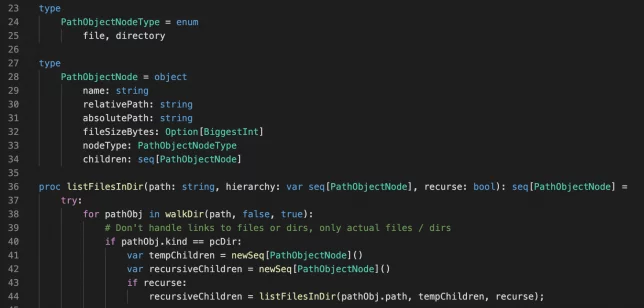

Ripples, The Retro QBasic Screensaver

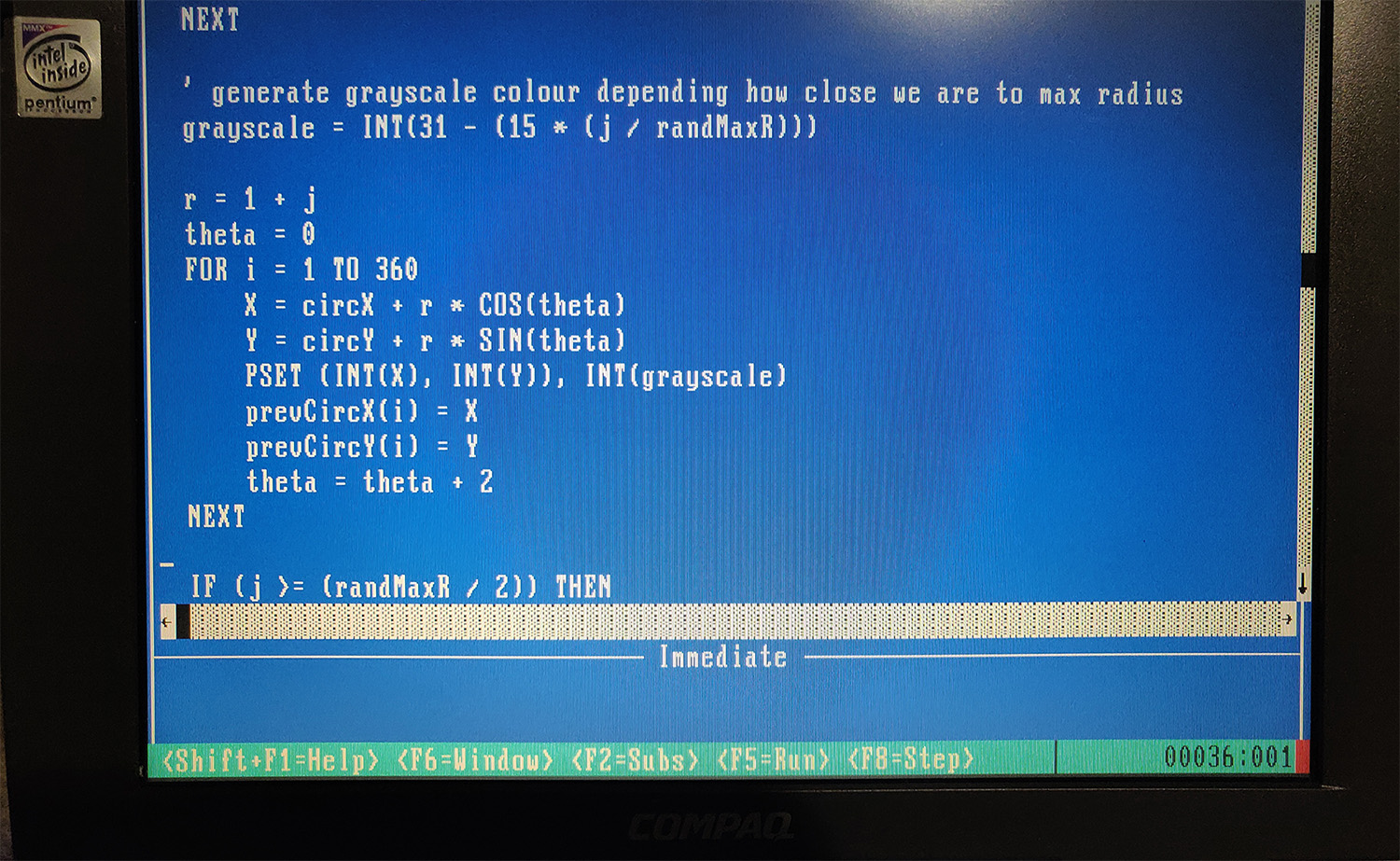

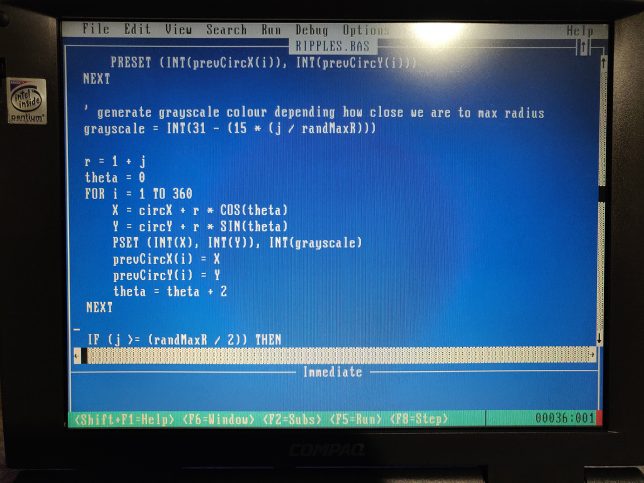

I wrote a simple screensaver for SCREEN 13 mode (320×200, 256-color VGA) and named it Ripples. It draws outer and inner circles at random locations, which expand and fade out. I used a basic circle-drawing algorithm and POKE to write values directly into video memory: in SCREEN 13 mode each pixel is one byte, so pixel (x, y) sits at offset y × 320 + x in the segment starting at &HA000.
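To make the POKE approach concrete, here is a minimal Python sketch rather than the actual QBasic source. It assumes the classic midpoint circle algorithm, which may differ from the routine Ripples really uses, and models video memory as a 64,000-byte bytearray. The helper names put_pixel and draw_circle are hypothetical. Each write into the buffer corresponds to a QBasic `POKE y * 320 + x, colour` after `DEF SEG = &HA000`.

```python
# Sketch only: midpoint circle plotting into a SCREEN 13-style framebuffer.
# Assumption: standard midpoint algorithm, not necessarily the author's routine.
# 320x200 pixels, one byte per pixel, so pixel (x, y) lives at y * 320 + x.

WIDTH, HEIGHT = 320, 200


def put_pixel(fb: bytearray, x: int, y: int, color: int) -> None:
    """Write one byte at offset y * WIDTH + x, skipping off-screen pixels."""
    if 0 <= x < WIDTH and 0 <= y < HEIGHT:
        fb[y * WIDTH + x] = color


def draw_circle(fb: bytearray, cx: int, cy: int, r: int, color: int) -> None:
    """Midpoint circle: walk one octant and mirror each point eight ways."""
    x, y = r, 0
    err = 1 - r  # decision variable: is the midpoint inside or outside?
    while x >= y:
        for dx, dy in ((x, y), (y, x), (-y, x), (-x, y),
                       (-x, -y), (-y, -x), (y, -x), (x, -y)):
            put_pixel(fb, cx + dx, cy + dy, color)
        y += 1
        if err < 0:
            err += 2 * y + 1        # midpoint inside: keep x
        else:
            x -= 1                  # midpoint outside: step x inwards
            err += 2 * (y - x) + 1
```

For example, `fb = bytearray(WIDTH * HEIGHT)` followed by `draw_circle(fb, 160, 100, 40, 15)` draws a radius-40 circle in colour 15 (bright white in the default palette) centred on the screen. Only integer additions are needed per pixel, which is why this style of routine was popular on slow machines.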

I didn’t use the much simpler CIRCLE statement because I wanted to draw multiple circles (in inner loops) without clearing the screen after every concentric circle. (Writing the circle-drawing routine itself was also fun.)
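One way to get that "no screen clear" behaviour is for each ripple to remember the pixels it drew in the previous frame and overwrite only those with black before drawing its next, larger pair of rings. The Python sketch below illustrates the idea. The Ripple class, the fade rule and the angle-stepped ring_points helper (similar to plotting with SIN and COS in QBasic) are all hypothetical, not taken from the actual source, and the fade assumes the default VGA palette, where indices 16 to 31 form a grey ramp from dark to bright.

```python
import math
import random

WIDTH, HEIGHT = 320, 200
GREY_DARK, GREY_BRIGHT = 16, 31  # assumption: default VGA palette grey ramp


def ring_points(cx, cy, r):
    """Integer points on a circle of radius r, sampled by angle (SIN/COS style)."""
    steps = max(8, int(2 * math.pi * r))
    pts = set()
    for i in range(steps):
        a = 2 * math.pi * i / steps
        pts.add((cx + round(r * math.cos(a)), cy + round(r * math.sin(a))))
    return pts


def plot(fb, pts, color):
    """Write color at every on-screen point, like a POKE per pixel."""
    for x, y in pts:
        if 0 <= x < WIDTH and 0 <= y < HEIGHT:
            fb[y * WIDTH + x] = color


class Ripple:
    """Hypothetical ripple: an outer ring and a half-size inner ring that grow and fade."""

    def __init__(self, cx, cy, max_r=40):
        self.cx, self.cy, self.r, self.max_r = cx, cy, 2, max_r
        self.drawn = set()  # pixels written last frame, erased next frame

    def step(self, fb):
        """Advance one frame; return False once the ripple has faded away."""
        plot(fb, self.drawn, 0)  # erase only our own previous rings, no full clear
        self.drawn = set()
        if self.r > self.max_r:
            return False
        fade = (GREY_BRIGHT - GREY_DARK) * self.r // self.max_r
        color = GREY_BRIGHT - fade  # bright when small, dark when large
        self.drawn = (ring_points(self.cx, self.cy, self.r)
                      | ring_points(self.cx, self.cy, self.r // 2))
        plot(fb, self.drawn, color)
        self.r += 1
        return True


def run(frames=200, seed=1):
    """Simulate the screensaver loop with up to three ripples at random spots."""
    rng = random.Random(seed)
    fb = bytearray(WIDTH * HEIGHT)
    ripples = []
    for _ in range(frames):
        if len(ripples) < 3:
            ripples.append(Ripple(rng.randrange(WIDTH), rng.randrange(HEIGHT)))
        ripples = [rp for rp in ripples if rp.step(fb)]
    return fb
```

Because each ripple erases only the pixels it drew itself, the screen is never cleared as a whole. The trade-off is that a ripple can knock out a few pixels of a neighbour it overlaps, which is usually acceptable for a screensaver.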

You can take a look at the quickly hacked-together source code here. I’m sure there are far more efficient ways of doing some of these things, but I didn’t want to spend too long digging into screen drawing. (Perhaps using DATA statements could improve things, or even inline machine code to perform the loops and the pixel writes that POKE currently handles.)

Running It on an Actual Retro System

I copied QBasic and the source code across to my actual retro system (the old Pentium laptop).

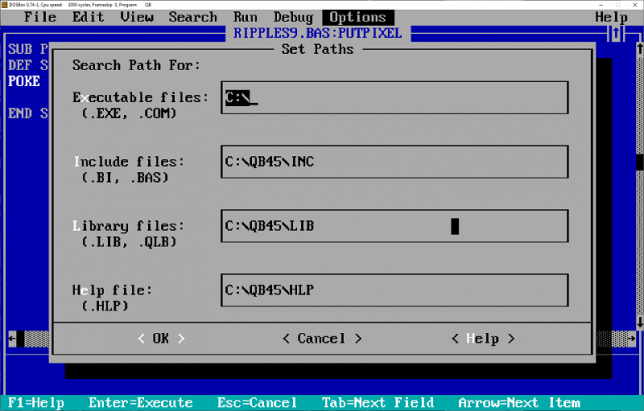

Next, I configured QBasic to use a linker so that it could compile an executable. This is simply a matter of making sure the paths are set correctly under Options.

I opened and compiled the source, disabling debug code, which also vastly improved speed. Windows 98 can run DOS applications directly from within Windows, and both QBasic and the compiled program ran perfectly that way. After rebooting into MS-DOS mode, I confirmed that the compiled executable ran perfectly there too.

Beyond the nostalgia, this was a mostly pointless exercise, but it certainly was a blast from the past. It also helps me appreciate the modern tooling and IDEs we use today. That said, I found myself iterating quickly through edits in QBasic using its edit and search options, so it wasn’t too bad after all.