Lately my family and I have been playing a lot of Kingdom Two Crowns. It’s a really fun and pretty relaxing game to play together: two players, split screen, helping each other expand and defend. We play together on the PlayStation, but I also have a copy on PC. Realising the game is developed in Unity, I thought I would have a go at modding it just for fun. I ended up using a hex editor to mod the game by replacing raw bytes in the game’s main resources.resource file (its asset storage).

Mods for Kingdom Two Crowns

Before doing this, I poked around online looking for other mods, and found some that work through some form of injection that runs during the game’s startup. This is cool, but I wanted to find my own method that could be applied to other games too.

Poking around the assets

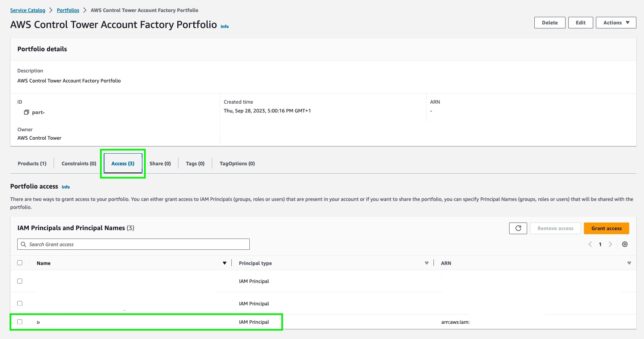

I started out by downloading UABE Avalonia, a ‘Cross-platform Asset Bundle/Serialized File reader and writer’ for Unity.

I didn’t find it useful for writing back to the Unity asset bundle files for Kingdom Two Crowns, but I did find it useful for gathering metadata about the files in the game I was interested in modding.

Using the main interface I opened the game’s resources.assets file (in the game’s data directory).

From there, I browsed the list of game assets till I found some of the music I wanted to replace in the Norselands DLC part of the game. This was easy: I looked up the game’s soundtrack to find the specific track I was interested in replacing, then searched the asset list for its name, Horsin’ Around.

Expanding the StreamedResource (m_Resource) object within the AudioClip component shows the source file (resources.resource) and the two critical bits of information we’ll need: the byte offset of the asset within that resource file, and the size of the asset (also in bytes).
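Those two numbers are all that is needed to pull the raw asset out of the resource file by hand. A minimal Python sketch, assuming the offset and size are copied from UABE Avalonia; `read_slice` is a hypothetical helper name, not part of any tool mentioned here:

```python
from pathlib import Path


def read_slice(resource_path: Path, offset: int, size: int) -> bytes:
    """Return exactly `size` bytes starting at byte `offset` of the file."""
    with open(resource_path, "rb") as f:
        f.seek(offset)       # jump to the asset's first byte
        data = f.read(size)  # read only the asset, not the whole file
    if len(data) != size:
        raise ValueError(f"expected {size} bytes, got {len(data)}")
    return data
```

Calling it with the path to resources.resource and the two values from m_Resource returns the asset exactly as it is stored on disk, which is not necessarily the format a plugin exports.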

Next, I opened the Plugins window for the Horsin’ Around AudioClip component and used that to extract the audio file (a .ogg audio file).

Modding the game with a Hex Editor

So next up was to edit the .ogg audio file and replace it with my own sound/music content. A verbal rendition of the track’s flute in my own voice. Something I thought would be funny for my family to suddenly encounter while playing when the track started up during a game.

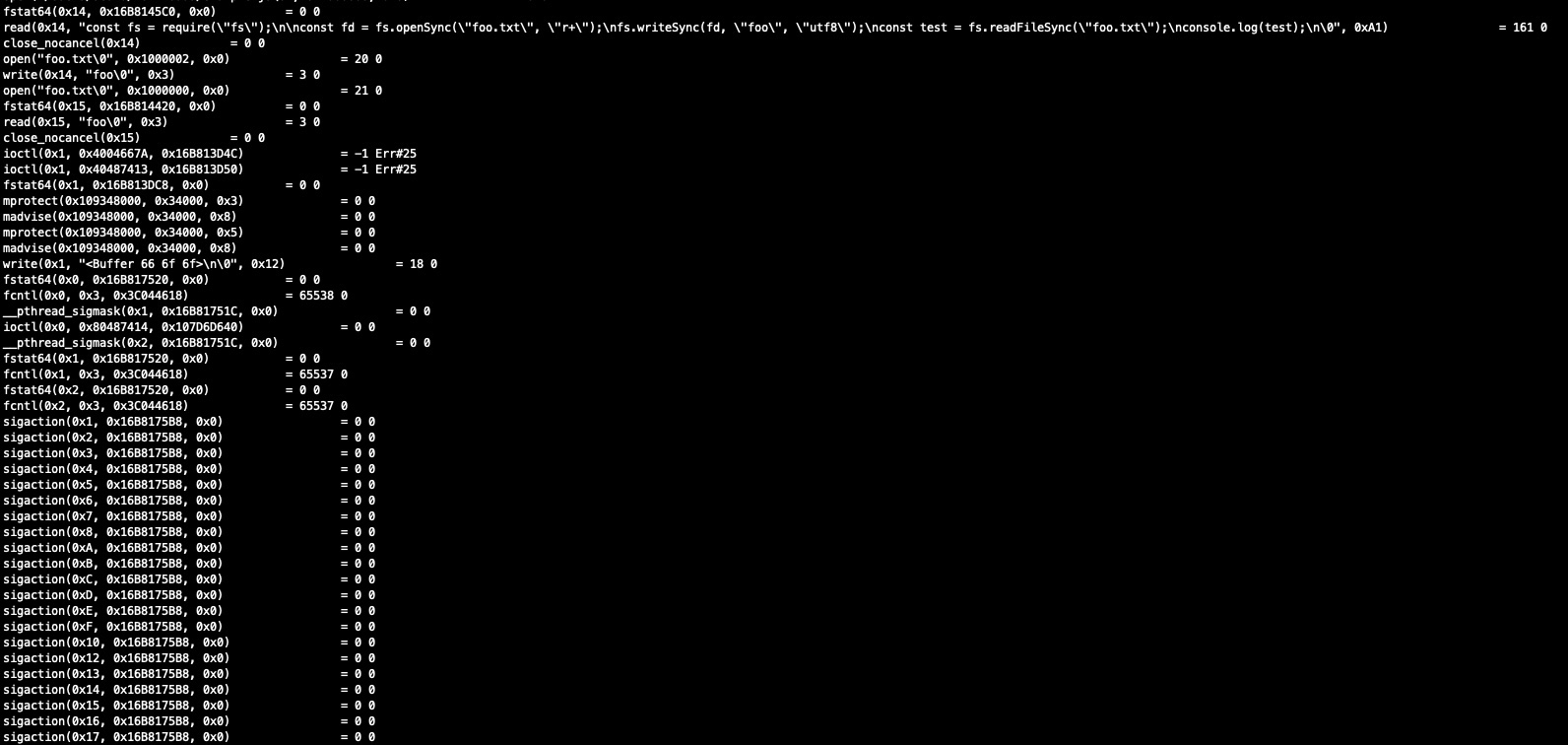

I opened the resources.resource file (around 800MB in size) using HxD – a hex editor.

With the metadata about the asset’s location in the file, I used the ‘Go to’ function to jump to the byte offset 528703872 (entered as decimal).
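Hex editors often display offsets in hexadecimal, so it helps to have both forms of the start and end positions at hand. A small Python sketch using the offset above and the asset’s byte size from the same metadata (6760256); the variable names are just for illustration:

```python
OFFSET = 528703872  # byte offset from the asset metadata (decimal)
SIZE = 6760256      # byte length of the asset (decimal)

end = OFFSET + SIZE  # offset of the first byte after the asset

start_hex = f"{OFFSET:X}"
end_hex = f"{end:X}"

print(f"start: {OFFSET} (dec) = {start_hex} (hex)")
print(f"end:   {end} (dec) = {end_hex} (hex)")
```

Entering a decimal value into a field that expects hex (or the other way round) lands somewhere else entirely, so it is worth checking which base the dialog is set to.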

Here is what the first few bytes starting at that location look like:

Next, I opened the .ogg audio file I extracted from the asset of the Horsin’ Around track in the hex editor.

That’s a clear difference. The headers of the two assets look different. The extracted file’s header indicates Ogg Vorbis (that much is obvious, since I extracted the file in .ogg audio format), while the original in the main resources.resource file shows a header value of ‘FSB5’, which I was not familiar with.
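The comparison comes down to the first four bytes of each blob. A minimal Python sketch of that check, assuming only the two signatures seen here (Ogg pages start with `OggS`, the in-game data with `FSB5`); `sniff_audio_container` is a hypothetical helper:

```python
def sniff_audio_container(data: bytes) -> str:
    """Guess the container from the first four bytes of `data`."""
    magic = data[:4]
    if magic == b"OggS":  # capture pattern at the start of an Ogg page
        return "ogg"
    if magic == b"FSB5":  # signature seen at the asset's offset in the game file
        return "fsb5"
    return "unknown"
```

Running it on the extracted .ogg and on the bytes read from the resource file at the asset’s offset should give two different answers, which is the mismatch visible in the hex editor.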

I already anticipated that directly pasting my modified Ogg Vorbis content over this FSB5 content would cause problems: the Unity game engine might not load and play it properly, and more likely would just crash.

Figuring out the correct file format

So I started to look into what FSB5 is. It turns out it is an FMOD Sample Bank file format. That makes sense, since FMOD is commonly used for game audio.

I would need to convert my custom .ogg file into this same FSB5 file format somehow. I started out by getting a trial/hobbyist version of FMOD Studio, but could not find any simple way of converting my .ogg file into an FSB5 file.

Next I found a CLI tool on GitHub, in the Bloodborne-sound-repacking repository, that can be used to convert Ogg Vorbis tracks into FSB5 tracks. In the Fmod directory on the main branch is the CLI tool fsbankcl.exe.

I edited my own version of the Horsin’ Around .ogg audio file, replacing the content with my own voice. Here, I was careful to keep the size of the file no larger than the exact byte size of the original track (6760256 bytes, so around 6MB). I used Audacity for this, and kept the export quality at its highest setting to minimise any loss from compression.
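Because the new bytes are later written over a fixed-size region, a quick size check saves a failed attempt. A minimal Python sketch; `fits_in_slot` is a hypothetical helper, and it is worth running on the converted file as well, since that is what actually gets pasted:

```python
import os

ORIGINAL_SIZE = 6760256  # byte length of the original asset, from the metadata


def fits_in_slot(path: str, slot_size: int = ORIGINAL_SIZE) -> bool:
    """True if the file at `path` is no larger than the original asset."""
    return os.path.getsize(path) <= slot_size
```

A replacement that is even one byte too long would overwrite whatever is stored after the track in the resource file.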

Next was to run the fsbankcl.exe with the source of my edited .ogg file, an output location to write the new file to, and the format argument of ‘vorbis’.

.\fsbankcl.exe "C:\Users\Sean\Downloads\03 - Horsin' Around modded 2.ogg" -o "C:\Users\Sean\Downloads\03 - Horsin' Around modified v2.fmod" -format vorbis

Loading the modified file in HxD now showed the header starting with what I wanted ‘FSB5’. Excellent.

Replacing the original resource file content with modified content

Now, back in the resources.resource file in HxD, and at the correct starting byte offset, I chose the Edit -> Select Block option to highlight the entire track within the game’s resource file. The Start-offset has to be the starting point of the audio, and the length has to be the byte length from the asset’s metadata, 6760256. Both are entered in decimal (dec), of course.

I switched back to the modified, FSB5-converted audio in HxD and did a select all (Ctrl + A) and copy (Ctrl + C).

I switched back to the selected bytes in the resources.resource file and used the Paste write (Ctrl + B) option to replace all the bytes with my modified bytes.
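The same overwrite can be scripted. The sketch below is a rough Python equivalent of the select, copy and paste-write steps under my own assumptions, not a feature of HxD: `patch_resource` is a hypothetical helper that copies the file to a .bak backup first (the naming is an assumption), then overwrites bytes in place without changing the file’s length.

```python
import shutil
from pathlib import Path


def patch_resource(resource_path, offset: int, slot_size: int,
                   replacement: bytes) -> None:
    """Overwrite bytes at `offset` with `replacement`, keeping file length."""
    resource_path = Path(resource_path)
    if len(replacement) > slot_size:
        raise ValueError("replacement is larger than the original asset")
    if offset + slot_size > resource_path.stat().st_size:
        raise ValueError("slot extends past the end of the file")

    # Keep an untouched copy so the original game file can be restored.
    backup = resource_path.with_name(resource_path.name + ".bak")
    if not backup.exists():
        shutil.copy2(resource_path, backup)

    # "r+b" opens for in-place writing without truncating the file.
    with open(resource_path, "r+b") as f:
        f.seek(offset)
        f.write(replacement)  # bytes after the replacement stay as they were
```

If the replacement is shorter than the slot, the tail of the original asset is left in place, which is also how an overwrite-style paste behaves.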

Lastly, I saved the resources.resource file in HxD, replacing the game’s original with this modified version, and started the game up.
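Before launching the game, a read-back check can confirm the edit landed where intended and that the file did not grow or shrink, since other assets in the same file are presumably located by byte offset too. A minimal Python sketch with a hypothetical helper `patch_matches`:

```python
import os


def patch_matches(resource_path: str, offset: int, replacement: bytes,
                  expected_file_size: int) -> bool:
    """True if the file kept its size and holds `replacement` at `offset`."""
    if os.path.getsize(resource_path) != expected_file_size:
        return False  # an insert instead of an overwrite would change the size
    with open(resource_path, "rb") as f:
        f.seek(offset)
        return f.read(len(replacement)) == replacement
```

Here `expected_file_size` is the size of the untouched resources.resource file, noted down before editing.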

No crashes, and the game loaded a new save into the Norselands DLC pack without issue.

After a while, the Horsin’ Around track started playing. Sure enough my modified audio was what came out of the speakers.

Conclusion

This is a valid way of modding games. Sometimes it’s necessary to go down to modifying raw bytes to change a game. Not all games are made with modding in mind, nor do they all expose easy interfaces or integration points.

The method I’ve used isn’t specific to Kingdom Two Crowns either. Many Unity games can likely be modified the same way, since they use the same sort of resource and streamed asset storage system.