ZFS includes some great features that help deliver lightning-fast read speeds for storage. Two such features are the Adaptive Replacement Cache (ARC) and an optional second cache tier, L2ARC. Together these features can dramatically decrease the number of reads required from magnetic storage.

On FreeBSD-based systems such as FreeNAS, ZFS will usually let ARC grow to use all but about 1GB of system memory. When files are read, they are cached in the system RAM dedicated to ARC, so they can be retrieved very quickly the next time they are requested.

A second tier of cache named L2ARC is also possible. This is where you dedicate solid state disks to act as another tier of cache behind ARC. L2ARC only really makes sense when you are fronting slower spinning magnetic disks and you don’t have enough RAM to support a large enough ARC. It doesn’t make a lot of sense if your storage pools are already running on solid state drives.

Another point to consider is that if your requirements mandate a large ARC size, system memory will start to get very expensive. If you are using magnetic storage tiers, then using SSD for L2ARC becomes a much cheaper option than simply dumping piles of money into more memory.
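To put rough numbers on why a cache tier matters far more in front of spinning disks than in front of SSDs, here is a minimal sketch of the usual weighted-average latency estimate. The function name and the three latency constants are my own illustrative assumptions (order-of-magnitude placeholders, not measurements from my server), so treat the output as a feel for the shape of the trade-off rather than a benchmark.

```python
def effective_read_latency_us(hit_ratio, cache_latency_us, backing_latency_us):
    """Average read latency when a fraction of reads is served from cache.

    hit_ratio is a fraction between 0.0 and 1.0; latencies are in microseconds.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0.0 and 1.0")
    return hit_ratio * cache_latency_us + (1.0 - hit_ratio) * backing_latency_us


# Assumed, order-of-magnitude latencies for illustration only.
RAM_US = 0.1        # read served from ARC in system memory
SSD_US = 100.0      # read served from a SATA SSD
HDD_US = 10_000.0   # read served from a spinning disk (seek + rotation)

if __name__ == "__main__":
    for name, backing in (("SSD pool", SSD_US), ("HDD pool", HDD_US)):
        for ratio in (0.90, 0.99):
            avg = effective_read_latency_us(ratio, RAM_US, backing)
            print(f"{name}, {ratio:.0%} ARC hits: ~{avg:.1f} us per read")
```

The miss penalty dominates the average, which is why every extra percent of cache hits is worth so much more when the backing store is magnetic.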

Analyzing ZFS ARC hit rates for Web Traffic to this Blog

I’ve been running this blog on my personal Kubernetes Raspberry Pi Cluster, which has its storage provisioned via NFS from my FreeNAS storage server.

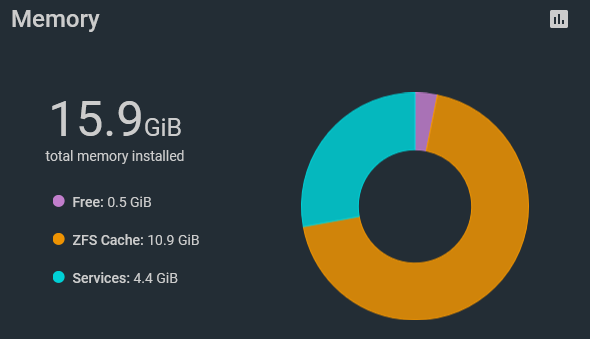

The storage and cache breakdown is:

- 4 x 3TB SATA spinning disks, RAIDZ1

- 2 x 250GB SSD SATA disks, ZFS Mirror

- 16GB RAM, around 4GB used for jails and VMs, the remaining for ARC

- No L2ARC. NFS storage is provided from the SSD based storage pool
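As a quick sanity check on the memory figures above, the ARC budget can be estimated with some simple arithmetic. This is only a sketch: the function name is made up for illustration, and the 1GB reserve is the rough default mentioned earlier, not a value read from my system.

```python
def estimate_arc_budget_gb(total_ram_gb, other_usage_gb, os_reserve_gb=1.0):
    """Rough upper bound on the RAM left over for ARC, in GB.

    ARC can use at most (total - reserve), and in practice no more than
    whatever jails, VMs and other services leave free.
    """
    ceiling = float(total_ram_gb) - float(os_reserve_gb)
    available = float(total_ram_gb) - float(other_usage_gb)
    return max(0.0, min(ceiling, available))


if __name__ == "__main__":
    # 16GB of RAM with around 4GB used by jails and VMs.
    print(estimate_arc_budget_gb(16, 4))  # prints 12.0
```

So on this box ARC has roughly 12GB to work with, which is a lot of cache for a small WordPress site.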

This blog has a bunch of static files along with its WordPress installation, plus a MySQL database. Both of these sets of files are provisioned from NFS on the ZFS mirror storage pool, utilizing Kubernetes PVs.

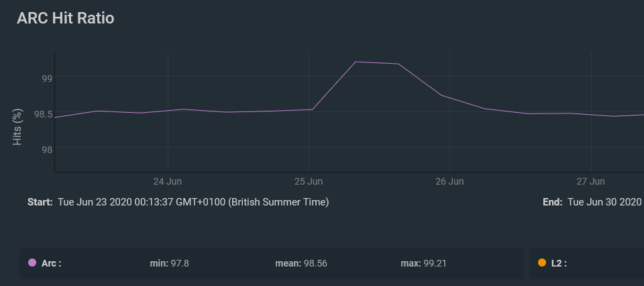

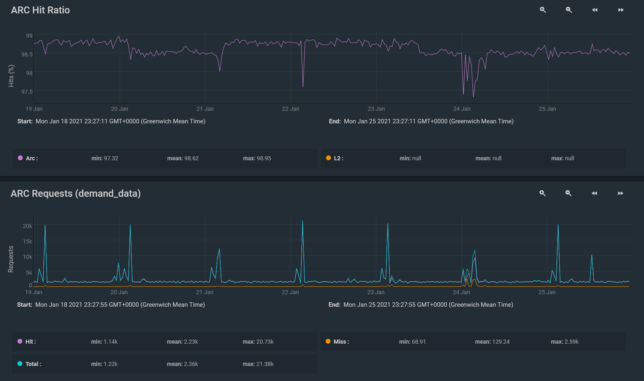

The SSD storage is already quite fast, but the cache features of ZFS really help here when serving the frequent random IO generated by web traffic. Just take a look at the two charts above.

Instead of constantly reading from SSDs and causing unnecessary wear, web traffic file requests are mostly served from ARC.

From these two graphs, I can tell that right now 16GB of system memory is perfectly fine for my home storage and web traffic serving needs. ARC is handling these workloads perfectly, with a very high cache hit ratio too.
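If you would rather compute the hit ratio yourself than read it off a graph, the raw counters are exposed on FreeBSD-based systems under `kstat.zfs.misc.arcstats`. Below is a minimal sketch that parses the text output of `sysctl kstat.zfs.misc.arcstats` and derives the overall ratio from the `hits` and `misses` counters. The helper names are my own, and the sample numbers are made up for illustration; they are not taken from my server.

```python
def parse_arcstats(sysctl_text):
    """Parse 'name: value' lines from sysctl output into a dict of ints.

    Keys are shortened to the last dotted component, e.g.
    'kstat.zfs.misc.arcstats.hits' becomes 'hits'.
    """
    stats = {}
    for line in sysctl_text.splitlines():
        name, sep, value = line.partition(":")
        if not sep:
            continue  # not a 'name: value' line
        key = name.strip().rsplit(".", 1)[-1]
        try:
            stats[key] = int(value.strip())
        except ValueError:
            continue  # skip non-numeric values
    return stats


def arc_hit_ratio(stats):
    """Return hits / (hits + misses), or 0.0 if there were no lookups."""
    hits = stats.get("hits", 0)
    misses = stats.get("misses", 0)
    total = hits + misses
    return hits / total if total else 0.0


# Made-up sample output, for illustration only.
sample = """kstat.zfs.misc.arcstats.hits: 990000
kstat.zfs.misc.arcstats.misses: 10000
"""

if __name__ == "__main__":
    print(f"ARC hit ratio: {arc_hit_ratio(parse_arcstats(sample)):.1%}")
```

Keep in mind these counters are cumulative since boot, so to see how the ratio behaves during a traffic spike you would sample them twice and work from the difference.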

Secondly, I certainly don’t need L2ARC. Web content is already sitting on SSD storage. Additionally, even if files were on magnetic storage, the main ARC would still be able to serve almost all web traffic.

Conclusion

ZFS ARC impresses all around. On its busiest day of web traffic last year, this blog saw 12,000 sessions and around 13,500 page views in just a few hours. ZFS ARC happily served the site’s storage needs from system memory.

In fact, the ARC hit ratio actually increased to just over 99% at this point in time!