I’m constantly searching for minimal cost web hosting solutions. To clarify, I mean ‘dynamic’ websites, not static ones. At the moment I am running this blog and a bunch of others on a Raspberry Pi Kubernetes cluster at home. I got to thinking though, what happens if I need to move? I’ll have an inevitable period of downtime. Clearly self-hosting from home has its drawbacks.

I’ve run my personal dynamic websites from AWS before (EC2 with a single Docker instance), but used an application load balancer (ALB) to help with routing traffic to different hostnames. The load balancer itself adds a large chunk of cost, and storage was EBS, a little more difficult to manage when automating host provisioning.

A Minimal Cost Web Hosting Infrastructure in AWS

I wanted to find something that minimises costs in AWS. My goal was to go as cheap as possible. I’ve arrived at the following solution, which saves on costs for networking, compute, and storage.

- AWS spot EC2 single instance running on AWS Graviton2 (ARM).

- EFS storage for persistence (a requirement is that containers have persistence, as I use WordPress and require MySQL etc…)

- Elastic IP address

- Simple Lambda function that automatically attaches a static Elastic IP (EIP) to the single EC2 instance (in case the spot instance is terminated and replaced due to demand or price changes, for example).

- Traefik v2 for reverse proxying of traffic hitting the single EC2 instance to containers. This allows for multiple websites / hosts on a single machine

It isn’t going to win any high availability awards, but I’m OK with that for my own self-hosted applications and sites.

One important requirement with this solution is the ability to run dynamic sites. I know I could be doing this all a lot easier with S3/CloudFront if I were to only be hosting static sites.

Using this setup also allows me to easily move workloads between my home Kubernetes cluster and the cloud. This is because the docker images and tags I am using are now compatible between ARM (on Raspberry Pi) and ARM on Graviton2 AWS docker instances.

The choices I have gone with allow me to avoid ‘cloud lock in’, as I can easily switch between the two setups if needed.

Cost Breakdown

I’ve worked out the monthly costs to be roughly as follows:

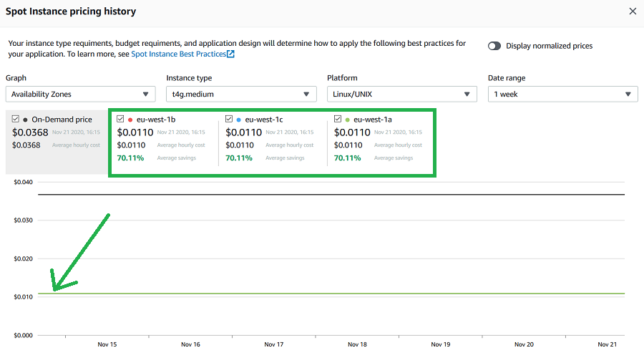

- EC2 Graviton2 ARM based instance (t4g.medium), $7.92

- 3GB EFS Standard Storage, $0.99

- Lambda – will only invoke when an EC2 instance change occurs, so cost not even worth calculating

- EIP – free, as it will remain attached to the EC2 instance at all times

If you don’t need 4GB of RAM, you can drop down to a t4g.small instance type for half the cost.

Total monthly running costs should be around $8.91.
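The total is just the sum of the line items above. As a quick sanity check, here is a small TypeScript sketch. The `CostItem` type and `monthlyTotal` helper are names made up for illustration, and the prices are the ones quoted in this post, with Lambda and the attached EIP treated as $0.

```typescript
// Hypothetical helper for illustration; prices are the figures quoted in this post.
interface CostItem {
  name: string;
  monthlyUsd: number;
}

const costItems: CostItem[] = [
  { name: "EC2 t4g.medium (spot)", monthlyUsd: 7.92 },
  { name: "EFS Standard, 3GB", monthlyUsd: 0.99 },
  { name: "Lambda (EIP manager)", monthlyUsd: 0 }, // negligible, treated as zero
  { name: "Elastic IP (attached)", monthlyUsd: 0 }, // treated as free, as in the post
];

// Sum in whole cents to avoid floating point drift, then convert back to dollars.
function monthlyTotal(items: CostItem[]): number {
  const cents = items.reduce(
    (sum, item) => sum + Math.round(item.monthlyUsd * 100),
    0
  );
  return cents / 100;
}

const total = monthlyTotal(costItems);
console.log(total.toFixed(2)); // prints "8.91"
```

Swapping in your own instance type or storage size only means changing the figures in `costItems`.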

Keep in mind that this solution will provide multiple hostname support (multiple domains/sites hosted on the same system), storage persistence, and a pretty quick and responsive ARM based Graviton2 processor.

You will need to use ARM compatible Docker images, but there are plenty out there for all the standard software like MySQL, WordPress, Adminer, etc…

How it Works

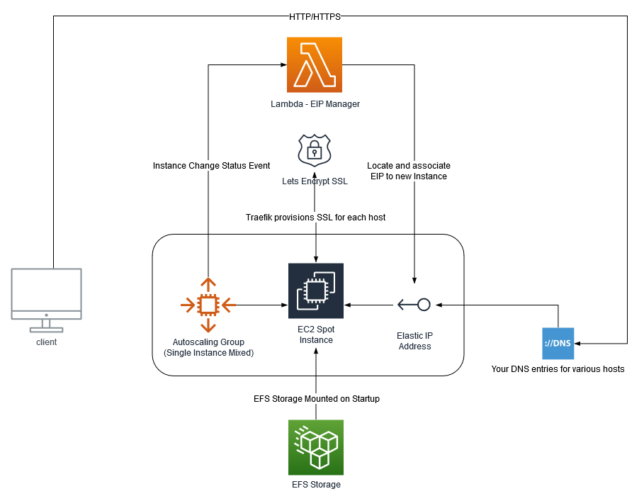

The infrastructure diagram above pretty much explains how everything fits together. But at a high level:

- An Auto Scaling group is created in mixed instances mode, but it only allows a single spot instance. This EC2 instance uses a standard Amazon Linux 2 ARM based AMI (machine image).

- When the new instance is created, a Lambda function (subscribed to EC2 lifecycle events) is invoked, locates a designated Elastic IP (EIP), and associates it with the new spot EC2 instance.

- The EC2 machine mounts the EFS storage on startup, and bootstraps itself with software requirements, a base Traefik configuration, as well as your custom ‘dynamic’ Traefik configuration that you specify. It then launches the Traefik container.

- You point your various A records in DNS to the public IP address of the EIP.

- Now it doesn’t matter if your EC2 spot instance is terminated, you’ll always have the same IP address, and the same EFS storage mounted when the new one starts up.

- There is the question of ‘what if the spot market goes haywire?’ By default the spot price will be allowed to go all the way up to the on-demand price. This means you could potentially pay more for the EC2 instance, but it is not likely. If it did happen, you could change the instance configuration or choose another instance type.
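The decision the Lambda function makes in the steps above can be sketched roughly as follows. This is not the actual function from the CDK app, only an illustration of the logic. It assumes the event looks like an EventBridge "EC2 Instance State-change Notification" (the post only says "EC2 lifecycle events"), the interfaces are simplified stand-ins for the real AWS types, and the AWS SDK calls that would fetch the addresses and perform the association are deliberately left out. The `Usage: Traefik` tag is the one listed in the deploy requirements.

```typescript
// Simplified, hypothetical shapes; the real AWS types carry many more fields.
interface InstanceStateEvent {
  "detail-type": string;
  detail: { "instance-id": string; state: string };
}

interface Tag {
  Key: string;
  Value: string;
}

interface ElasticIp {
  AllocationId: string;
  PublicIp: string;
  Tags?: Tag[];
}

// Mirrors the parameter names of the EC2 AssociateAddress API.
interface AssociationRequest {
  AllocationId: string;
  InstanceId: string;
  AllowReassociation: boolean;
}

// Find the first EIP carrying the expected tag (Usage: Traefik by default).
function findTaggedEip(
  addresses: ElasticIp[],
  key = "Usage",
  value = "Traefik"
): ElasticIp | undefined {
  return addresses.find((addr) =>
    (addr.Tags ?? []).some((tag) => tag.Key === key && tag.Value === value)
  );
}

// Decide what, if anything, should be associated for an incoming event.
function planAssociation(
  event: InstanceStateEvent,
  addresses: ElasticIp[]
): AssociationRequest | undefined {
  // Only act once the replacement instance is actually running.
  if (event.detail.state !== "running") return undefined;
  const eip = findTaggedEip(addresses);
  if (!eip) return undefined;
  return {
    AllocationId: eip.AllocationId,
    InstanceId: event.detail["instance-id"],
    AllowReassociation: true, // take the EIP over from the terminated instance
  };
}
```

In a real handler, the returned object would be passed to the EC2 AssociateAddress call, and an `undefined` result would simply mean there is nothing to do for that event.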

Deploying the Solution

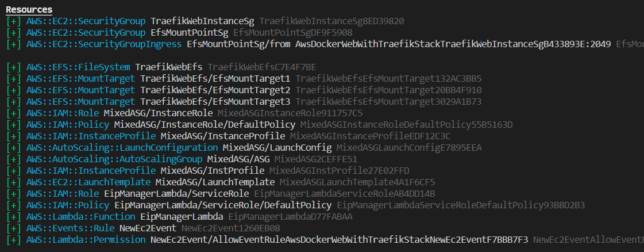

As this is an AWS-opinionated infrastructure choice, I’ve packaged everything into an AWS Cloud Development Kit (AWS CDK) app. AWS CDK is an open source software development framework that allows you to do infrastructure-as-code. I’ve used TypeScript as my language of choice.

Deploy Requirements

You’ll need the following requirements on your local machine to deploy this for yourself:

- NodeJS installed, along with npm.

- AWS CDK installed globally (npm install -g aws-cdk)

- Define your own traefik_dynamic.toml configuration, and host it somewhere where the EC2 instance will be able to grab it with curl. Note that the Traefik dashboard basic auth password is defined using htpasswd.

  - An example traefik_dynamic.toml file can be seen here

  - You must generate the password with this tool. E.g. htpasswd -nb YourUsername YourSuperSecurePasswordGoesHere

- An existing VPC in your account to use. The CDK app does not create a VPC (additional cost). You can definitely use your default account VPC that is already available in all accounts though.

- An existing AWS Keypair

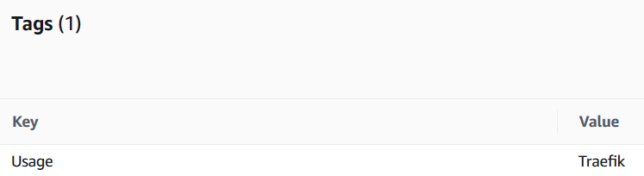

- An existing Elastic IP address (EIP) created, and tagged with the key/value of Usage:Traefik (this is for the Lambda function to identify the right EIP to associate to the EC2 instance when it starts)

I haven’t set up the CDK app to pass in parameters, so you’ll just need to modify a bunch of variables at the top of aws-docker-web-with-traefik-stack.ts to substitute your specific values for the aforementioned items. For example:

    const vpcId = "your-vpc-id";
    const instanceType = "t4g.medium"; // t4g.small for even more cost saving
    const keypairName = "your-existing-keypair-name";
    const managementLocationCidr = "1.1.1.1/32"; // your home / management network address that SSH access will be allowed from. Change this!
    const traefikDynContentUrl = "https://gist.githubusercontent.com/Shogan/f96a5a20183e672f9c49f278ea67503b/raw/351c52b7f2bacbf7b8dae65404b61ff4e4313d81/example-traefik-dynamic.toml"; // this should point to your own dynamic traefik config in toml format.
    const emailForLetsEncryptAcmeResolver = 'email = "youremail@example.com"'; // update this to your own email address for lets encrypt certs
    const efsAutomaticBackups = false; // set to true to enable automatic backups for EFS
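Since the management CIDR controls who can reach SSH, it is worth checking before you deploy. The sketch below is a hypothetical pre-deploy check and is not part of the CDK app; `isIpv4Cidr` and `checkManagementCidr` are made-up names, and the check only covers IPv4.

```typescript
// Hypothetical pre-deploy sanity check; not part of the CDK app itself.
function isIpv4Cidr(value: string): boolean {
  const match = /^(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})\/(\d{1,2})$/.exec(value);
  if (!match) return false;
  const octets = match.slice(1, 5).map(Number);
  const prefix = Number(match[5]);
  return octets.every((octet) => octet <= 255) && prefix <= 32;
}

// Returns a list of human-readable problems; an empty list means the value looks sane.
function checkManagementCidr(cidr: string): string[] {
  const problems: string[] = [];
  if (!isIpv4Cidr(cidr)) {
    problems.push(`"${cidr}" is not a valid IPv4 CIDR`);
  }
  if (cidr === "1.1.1.1/32") {
    problems.push("managementLocationCidr is still the placeholder value");
  }
  if (cidr.endsWith("/0")) {
    problems.push("a /0 range would open SSH to the whole internet");
  }
  return problems;
}
```

For example, `checkManagementCidr("203.0.113.10/32")` returns an empty list, while the untouched placeholder returns one problem.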

Build and Deploy

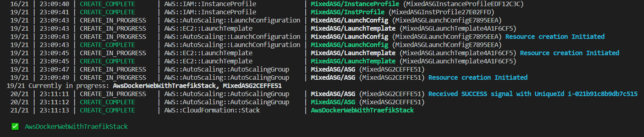

Build the TypeScript project using npm run build. This compiles both the CDK app and the EIP Manager Lambda function from TypeScript.

At this point you’re ready to deploy with CDK.

If you have not used CDK before, all you really need to know is that it takes the infrastructure described by the code (TypeScript in this case), and converts it into a CloudFormation template. The cdk deploy command deploys the stack (which is the collection of AWS resources defined in code).

Run:

    # Check what changes will be made first
    cdk diff
    # Deploy
    cdk deploy

Testing a Sample Application Stack

Here is a sample docker-compose stack that will install MySQL, Adminer, and a simple WordPress setup.

SSH onto the EC2 instance that is provisioned, and use docker-compose up -d to deploy the compose example stack. Just remember to edit and change the template passwords in the two environment variables.

You’ll also need to update the hostnames to your own (from .example.com), and point those A records to your Elastic public IP address.
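Hostname routing in a compose stack like this is typically done with Traefik v2 Docker labels on each service. As a rough illustration of what those labels look like for one site, here is a small hypothetical helper. The entry point name `websecure` and the certificate resolver name `letsencrypt` are assumptions; use whatever names your own Traefik configuration defines.

```typescript
// Hypothetical helper: builds Traefik v2 Docker labels for a single site.
// "websecure" and "letsencrypt" are assumed names, not taken from the stack.
function traefikLabels(
  routerName: string,
  hostname: string,
  entryPoint = "websecure",
  certResolver = "letsencrypt"
): string[] {
  return [
    "traefik.enable=true",
    // Route requests whose Host header matches this hostname.
    `traefik.http.routers.${routerName}.rule=Host(\`${hostname}\`)`,
    `traefik.http.routers.${routerName}.entrypoints=${entryPoint}`,
    `traefik.http.routers.${routerName}.tls.certresolver=${certResolver}`,
  ];
}

const blogLabels = traefikLabels("blog", "blog.example.com");
```

Each site gets its own router name and hostname, which is what lets several domains share the single EC2 instance.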

One more thing, there is a trick to running docker-compose on ARM systems. I personally prefer to grab a Docker image that contains a pre-built docker-compose binary, along with a shell script that ties it together with the docker-compose command. Here are the steps if you need them (run on the EC2 instance that you SSH onto):

    sudo curl -L --fail https://raw.githubusercontent.com/linuxserver/docker-docker-compose/master/run.sh -o /usr/local/bin/docker-compose
    sudo chmod +x /usr/local/bin/docker-compose

For your own peace of mind, make sure you inspect that githubusercontent run.sh script yourself before downloading, as well as the docker image(s) it references and pulls down to run docker-compose.

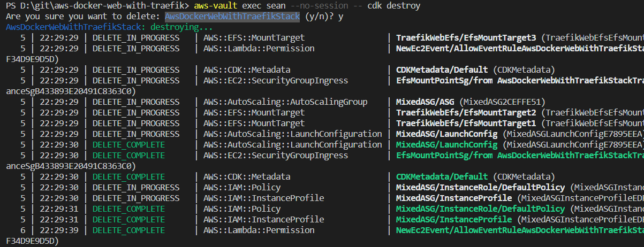

Tear Down

To destroy the stack, simply issue the cdk destroy command. The EFS storage is marked by default with a retain policy, so it will not be deleted automatically.

cdk destroy AwsDockerWebWithTraefikStack

Closing

If you’re on the lookout for a minimal cost web hosting solution, then give this a try.

The stack uses the new Graviton2 based t4g instance type (ARM) to help achieve a minimal cost web hosting setup. Remember to find compatible ARM docker images for your applications before you go all in with something like this.

The t4g instance family is also a ‘burstable’ type. This means you’ll get great performance as long as you don’t use up your burst credits. Performance will slow right down if that happens. Keep an eye on your burst credit balance with CloudWatch. For 99% of use cases you’ll likely be just fine though.
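To get a feel for how quickly burst credits drain, here is a rough back-of-the-envelope sketch. It relies on the AWS definition that one CPU credit equals one vCPU running at 100% for one minute. The helper names are made up, and the example figures (2 vCPUs, 24 credits earned per hour, a starting balance of 288) are assumptions for illustration, so check the current AWS documentation for your instance type.

```typescript
// Net CPU credits gained (positive) or lost (negative) per hour.
// One CPU credit = one vCPU running at 100% for one minute.
function netCreditsPerHour(
  vcpus: number,
  avgUtilisation: number, // 0.5 means 50% average CPU across all vCPUs
  creditsEarnedPerHour: number
): number {
  const creditsSpent = vcpus * avgUtilisation * 60;
  return creditsEarnedPerHour - creditsSpent;
}

// Hours until the balance runs out, or Infinity if the balance is not shrinking.
function hoursUntilEmpty(balance: number, netPerHour: number): number {
  return netPerHour >= 0 ? Infinity : balance / -netPerHour;
}

// Assumed example: 2 vCPUs at a sustained 50%, earning 24 credits per hour.
const net = netCreditsPerHour(2, 0.5, 24); // -36 credits per hour
const hoursLeft = hoursUntilEmpty(288, net); // 8 hours
```

With these assumed numbers, a sustained 50% load would use up a balance of 288 credits in about 8 hours, while anything at or below the break-even utilisation never drains it.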

Also remember that you don’t need to stick to AWS. You could bolt together services from any other cloud provider to do something similar, most likely at a comparable cost too.