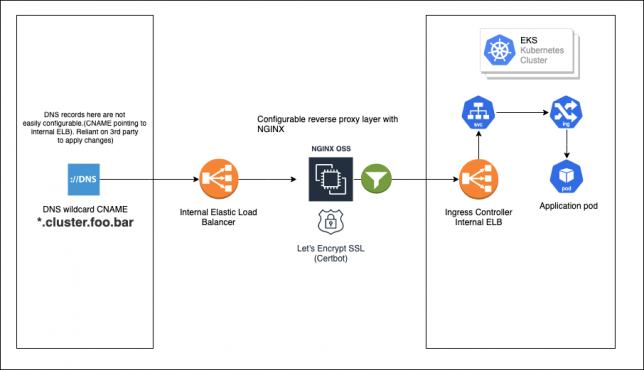

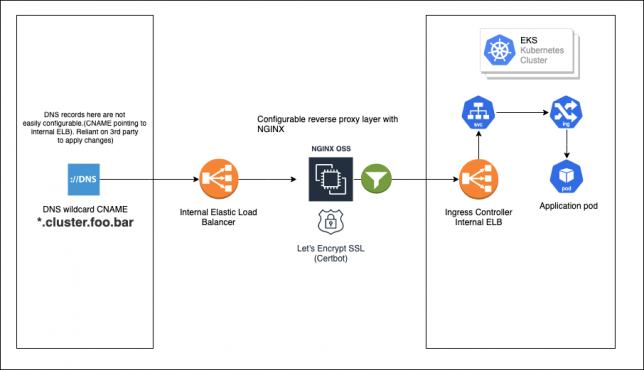

This is a pattern I’ve used successfully to provide access to apps running in a number of Kubernetes clusters that were each restricted to a single Kubernetes ingress load balancer.

The Scenario

- Kubernetes clusters (EKS) are on the internal network only (in this case private subnets in an AWS VPC).

- IAM permissions are locked down to prevent the creation of security groups (we can only use existing, pre-defined security groups), so the Kubernetes LoadBalancer service type is off-limits: the k8s control plane needs to create load balancers and their security groups automatically, and that operation fails under the restricted IAM permissions on the cluster. We have one Elastic Load Balancer, created when the cluster was initially bootstrapped with an nginx ingress controller and a service of type LoadBalancer, before the permissions were locked down again.

- The Ingress Controller that is running is backed by an internal-facing Elastic Load Balancer (ELB), created initially as described above.

- Applications run across namespaces in each cluster, and the Ingress Controller must be able to provide dynamic access for users of these internal applications, who sit on the network outside the k8s cluster.

- DNS and ingress must be dynamic enough to allow the same apps to run in different namespaces, using the same URL path but with differing hostnames. SSL must also be provided for all of these apps using a wildcard SSL certificate. For example:

  - namespace1.cluster.foo.bar/app1

  - namespace2.cluster.foo.bar/app1

  - namespace3.cluster.foo.bar/app1

  - namespace1.cluster.foo.bar/app2

  - namespace2.cluster.foo.bar/app2

  - namespace3.cluster.foo.bar/app2

- Once the wildcard DNS CNAME record is created, it is difficult to re-point it to a new location if changes are needed (we rely on a third party to manage DNS).

A Solution with Reverse Proxying

There are of course a number of ways to approach this, such as running cert-manager inside the cluster with the letsencrypt issuer or, if you are running your own PKI with Vault, the Vault issuer.

cert-manager wouldn’t work well here, as services are not publicly accessible for HTTP-01 certificate verification, and its DNS-01 solvers would need programmatic access to DNS, which is not possible in this environment.

It could also be possible to terminate SSL at the ingress controller level in the cluster with the SSL certificate loaded there.

One additional requirement that I didn’t mention above though was that developers who are pushing their apps into the clusters need to be able to ‘dynamically’ configure their own personal ‘dev’ namespaces / ingress rules.

They configure their ingress easily enough with the Kubernetes Ingress resource when they deploy their apps (using Helm); however, hostnames are not so easy for them to configure. Route53 is not in use here and is not allowed in this environment, and programmatic access to DNS is not possible.

A Reverse Proxy with NGINX

This layer exists more or less just to allow easy re-pointing of the wildcard CNAME DNS entry to the Kubernetes cluster. As DNS is not easily configured (it is handled by another team/resource), we can simply leave it pointed at the NGINX Elastic Load Balancer, and then re-point requests using NGINX configuration if we need to.

It’s worth pointing out that this NGINX layer could be hosted in a multitude of places, including as a containerised solution, or it could even be replaced by a Lambda function with API Gateway doing the reverse proxying instead.

Environments are designated by namespaces in each ‘class’ of cluster. For example, a non-production EKS cluster will have namespaces for non-production environments.

Hostnames need to be used to help the ingress rules match correctly with designated paths.

I configured an internal load balancer and set up a fleet of NGINX instances behind it.

Here is a quick runbook of how to set up NGINX and certbot on a vanilla Amazon Linux 2 EC2 instance. Use whichever automation you prefer, such as baking your own AMI with Packer, or using Terraform or Ansible, but the steps to install NGINX and certbot are effectively:

```shell
# nginx
sudo amazon-linux-extras install nginx1.12
sudo systemctl enable nginx
sudo systemctl start nginx

# certbot (installed from the EPEL repository)
sudo wget -r --no-parent -A 'epel-release-*.rpm' https://dl.fedoraproject.org/pub/epel/7/x86_64/Packages/e/
sudo rpm -Uvh dl.fedoraproject.org/pub/epel/7/x86_64/Packages/e/epel-release-*.rpm
sudo yum-config-manager --enable 'epel*'
yum repolist all
sudo yum install -y certbot

# request / generate letsencrypt wildcard cert using dns challenge interactively
# (the wildcard is quoted so the shell does not try to expand it)
# This command is interactive: omit it from automation and run it manually if you're using DNS-01 like I am here.
# certbot will give you a dynamically generated TXT record value for DNS-01 that you'll need to create.
sudo certbot -d '*.your.domain.here' --manual --preferred-challenges dns certonly

sudo systemctl restart nginx
```

Once NGINX is installed and your certs are generated, you’ll need to configure /etc/nginx/nginx.conf to point to the correct certificate files.

A wildcard CNAME record is created once-off that points anyhost.cluster.foo.bar to the internal ELB hostname for the reverse proxy NGINX instances (these sit outside of the cluster as standard EC2 hosts for now). For example:

[CNAME] *.cluster.foo.bar -> internal-nginx-reverse-proxy-fleet-xxxx-xxxx.us-east-2.elb.amazonaws.com

I used certbot (letsencrypt) to issue a wildcard SSL certificate for the NGINX fleet servers for *.cluster.foo.bar. The DNS-01 challenge type was used, as everything here is in a private, internal network that is not accessible by letsencrypt services (letsencrypt also only issues wildcard certificates via DNS-01).

A TXT record just needs to be created in your DNS zone to prove to letsencrypt that you control the domain in question.
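As a rough sketch of what that record looks like: the DNS-01 challenge for a wildcard name is validated against a TXT record called `_acme-challenge.<base domain>`. The helper name below is hypothetical, and the TXT value itself always comes from certbot's interactive prompt.

```shell
# Hypothetical helper: print the TXT record name that the DNS-01 challenge
# uses for a given certificate domain.
acme_challenge_name() {
  local domain
  # A wildcard such as *.cluster.foo.bar is validated against its base domain,
  # so strip a leading "*." if one is present.
  domain=${1#"*."}
  printf '_acme-challenge.%s\n' "$domain"
}
```

Before confirming the certbot prompt, something like `dig +short TXT "$(acme_challenge_name '*.cluster.foo.bar')"` can show whether the record has propagated (assuming `dig` is installed and the zone is resolvable from where you run it).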

In the NGINX configuration, the generated certificate is loaded for port 443 and the following location rule is set up to proxy_pass the requests sent to the NGINX fleet back to the Kubernetes Ingress Controller ELB.

```nginx
location / {
    # forward the client's original Host header to the ingress controller
    proxy_set_header Host $host;
    proxy_pass http://internal-ingress-controller-xxxxx.us-east-2.elb.amazonaws.com;
}
```

The proxy_set_header directive is important, as it adds the host header that the NGINX fleet instance receives from the client, and sends it with the proxied request back to the Kubernetes ingress controller. The ingress rules need to match both hostname AND path in the requests to find the correct service inside the cluster/namespace.
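One way to sanity-check the hostname matching is to send a request straight to the ingress controller ELB with the Host header set by hand, which mimics what the NGINX fleet forwards. This is a minimal sketch, assuming `curl` is available on a host inside the VPC; the `check_route` helper and the `INGRESS_ELB` variable are hypothetical names, and the ELB hostname is the same placeholder used in the location rule.

```shell
# Placeholder ELB hostname; override it by exporting INGRESS_ELB first.
INGRESS_ELB="${INGRESS_ELB:-internal-ingress-controller-xxxxx.us-east-2.elb.amazonaws.com}"

# Hypothetical helper: request a path from the ingress controller while
# presenting the given Host header, and print only the HTTP status code.
check_route() {
  local host="$1" path="$2"
  curl -sS -o /dev/null -w '%{http_code}\n' \
    -H "Host: ${host}" "http://${INGRESS_ELB}${path}"
}
```

For example, `check_route namespace1.cluster.foo.bar /app1` and `check_route namespace2.cluster.foo.bar /app1` should each reach the service in their own namespace, provided matching ingress rules exist.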

SSL is now effectively terminated at the NGINX fleet layer with a wildcard SSL certificate and services inside the cluster don’t need to worry about configuring their own individual SSL certificates.
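To tie the pieces together, here is a sketch of a complete server block, wrapped in a small shell function so it fits alongside the runbook steps. It assumes certbot's default certificate location under /etc/letsencrypt/live/ (check the paths certbot printed when it issued the certificate); the `render_proxy_conf` name is hypothetical, as is the target file name in the usage note.

```shell
# Hypothetical helper: print an NGINX server block that terminates SSL for the
# wildcard domain and proxies everything to the ingress controller ELB.
render_proxy_conf() {
  local domain="$1"    # base domain, e.g. cluster.foo.bar
  local upstream="$2"  # ingress controller ELB hostname
  cat <<EOF
server {
    listen 443 ssl;
    server_name *.${domain};

    # assumed certbot default paths for a certificate issued for *.${domain}
    ssl_certificate     /etc/letsencrypt/live/${domain}/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/${domain}/privkey.pem;

    location / {
        # pass the client's Host header through to the ingress controller
        proxy_set_header Host \$host;
        proxy_pass http://${upstream};
    }
}
EOF
}
```

The output could then be written somewhere the main nginx.conf includes, for instance `render_proxy_conf cluster.foo.bar internal-ingress-controller-xxxxx.us-east-2.elb.amazonaws.com | sudo tee /etc/nginx/conf.d/reverse-proxy.conf`, followed by `sudo nginx -t` to validate it before restarting NGINX.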

Ingress Rule Configuration

Now developers can deploy their apps and customise their ingress rules to use both hostname and path to set up access for their apps running in the cluster(s).

For example:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  labels:
    app: some-app
  name: some-app
  namespace: namespace1
spec:
  rules:
  - host: namespace1.cluster.foo.bar
    http:
      paths:
      - backend:
          serviceName: app1
          servicePort: 8083
        path: /app1
```
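Note that the `extensions/v1beta1` Ingress API was removed in Kubernetes 1.22, so on newer clusters the same rule has to be expressed with `networking.k8s.io/v1`. Below is a rough sketch of that, written as a shell helper that prints a manifest per namespace and app. The `render_ingress` name and `CLUSTER_DOMAIN` variable are hypothetical, and it assumes an IngressClass named `nginx` exists and that the Service shares the app's name.

```shell
# Placeholder cluster domain; override it by exporting CLUSTER_DOMAIN first.
CLUSTER_DOMAIN="${CLUSTER_DOMAIN:-cluster.foo.bar}"

# Hypothetical helper: print a networking.k8s.io/v1 Ingress that routes
# <namespace>.<cluster domain>/<app> to the Service named <app>.
render_ingress() {
  local namespace="$1" app="$2" port="$3"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${app}
  namespace: ${namespace}
  labels:
    app: ${app}
spec:
  ingressClassName: nginx   # assumes an IngressClass named "nginx" exists
  rules:
  - host: ${namespace}.${CLUSTER_DOMAIN}
    http:
      paths:
      - path: /${app}
        pathType: Prefix
        backend:
          service:
            name: ${app}
            port:
              number: ${port}
EOF
}
```

A developer could review the output of `render_ingress namespace1 app1 8083` and then pipe it to `kubectl apply -f -`, although in practice the same template would more likely live in their Helm chart.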

There are definitely other ways of doing this, possibly cleaner and in some ways more automated. However, with the constraints in play here (internal EKS, private-only networks, no public internet access into the cluster), I think this is a good solution that makes life fairly pleasant for the developers who need to deploy their apps to these Kubernetes clusters.