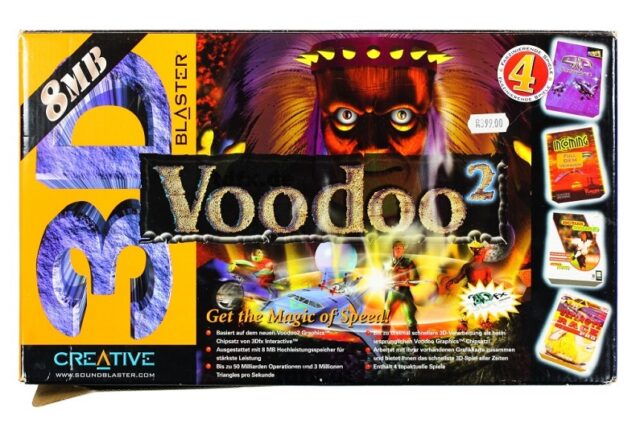

In the late nineties, as a teenager, I had the privilege of owning a second-hand Creative Labs 3dfx Voodoo 2 graphics card. It was an upgrade for my own hand-built PC, an AMD K6-2 333MHz system I had painstakingly saved up for and cobbled together.

Nostalgia running high, I recently set about building up a ‘retro’ gaming PC. With roughly the same specification as my original K6-2 machine from the late nineties, it also features an original 3dfx Voodoo 2 accelerator card.

The Brief Story of 3dfx Interactive and the Voodoo

In 1994, a group of colleagues who had worked together at SGI broke away to found 3dfx Interactive in San Jose, California. This followed an initial failed attempt at selling rather pricey IrisVision boards for PCs.

Their original plan was to create specialist hardware solutions for arcade games, but they changed direction to design PC add-on boards instead. The pivot came about thanks to a few favourable factors, namely:

- The cost of RAM was low enough to make this a feasible endeavour.

- RAM latency was much improved and allowed for ‘high’ clock speeds of up to 50MHz.

- 3D games were picking up in popularity. With id Software no doubt spearheading the trend through games such as Wolfenstein 3D and DOOM, the market was clearly headed to a point where 3D accelerated graphics would be in demand.

The first design, the SST1 (marketed as the Voodoo 1), targeted the $300-$400 price range for consumer PCs. A variety of OEMs picked up the design and released their own boards based on the reference design, leading to the success of the original Voodoo line-up of 3D accelerator cards.

Enter the Voodoo 2

After the initial success of the Voodoo 1, the 3dfx Voodoo 2 (SST2) was soon born. It had vastly improved specifications over the original SST1 design such as:

- 100MHz clock-rated EDO RAM (running at 90MHz, 25ns)

- 90MHz ASICs

- 2 x Texture Mapping Units (TMUs)

The fillrate of the Voodoo 2 was around 90 MPixels/s, and frame rates in benchmarks showed a massive uplift, nearly doubling in some cases.
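As a rough back-of-the-envelope check on those numbers, here is a small sketch of how a theoretical peak fillrate falls out of the clock speed. It is my own simplification, not an official 3dfx formula: it assumes one pixel is written per clock cycle and that each TMU samples one texel per clock, and it assumes the original Voodoo ran at the 50MHz figure mentioned earlier. The function names are made up for illustration.

```python
def pixel_fillrate_mpixels(core_clock_mhz: float, pixels_per_clock: int = 1) -> float:
    """Theoretical peak pixel fillrate in MPixels/s.

    Assumption: the chip writes `pixels_per_clock` pixels every cycle.
    """
    return core_clock_mhz * pixels_per_clock


def texel_fillrate_mtexels(core_clock_mhz: float, tmus: int) -> float:
    """Theoretical peak texel rate in MTexels/s.

    Assumption: each TMU samples one texel per clock.
    """
    return core_clock_mhz * tmus


# Assumed clocks: 50MHz for the Voodoo 1, 90MHz for the Voodoo 2.
voodoo1_pixels = pixel_fillrate_mpixels(50)
voodoo2_pixels = pixel_fillrate_mpixels(90)
voodoo2_texels = texel_fillrate_mtexels(90, tmus=2)

# Ratio of the two theoretical pixel fillrates.
uplift = voodoo2_pixels / voodoo1_pixels

print(f"Voodoo 1: {voodoo1_pixels:.0f} MPixels/s")
print(f"Voodoo 2: {voodoo2_pixels:.0f} MPixels/s, {voodoo2_texels:.0f} MTexels/s")
print(f"Pixel fillrate uplift: {uplift:.1f}x")
```

Under these assumptions the pixel fillrate ratio works out to 90 / 50 = 1.8, which lines up with the 'nearly doubling' seen in benchmarks. Real-world frame rates depend on much more than this (CPU speed, memory bandwidth, the game itself), so treat it as a sanity check only.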

My First (Original) 3dfx Voodoo 2 PC Build

My first self-built (from scratch) PC was a bit of a Frankenstein build. I had carefully budgeted and selected a motherboard that had everything onboard: graphics, sound and networking. It would also run an AMD K6-2 CPU, a budget-friendly option compared to Intel at that time.

The onboard graphics card shared 8MB of memory from system RAM (of which I only had 32MB total). As far as I recall it was capable of running Direct3D-enabled games, albeit hobbling along with crippled frame rates.

Upgrading to a 3dfx Accelerator Card

After enduring this ‘fps hardship’ and learning more about the recently successful 3dfx graphics cards (a friend had a 4MB 3dfx Voodoo ‘1’ card), I found out about a second-hand option. It was available through a friend of a friend, and would cost me 500.00 South African Rand (ZAR). This was a lot for a teen back then. I had saved up money, and bargained with my parents to combine my funds with a birthday gift contribution in order to purchase the card.

As I recall, I had to make massive concessions in order to fit the graphics card into my machine.

My motherboard only had 2 PCI slots. Both were horizontally in line with the CPU socket. The board design was probably never intended to house cards longer than the average PCI device at the time.

I had to remove the K6-2’s heatsink I was using and replace it with one from an older 486 machine that I had lying around. The profile of the 486 heatsink was much lower, allowing the 3dfx Voodoo 2 card to slot into a PCI slot, extending over the heatsink.

The problem now was that the 3dfx card’s lower PCB edge was just about in direct contact with the heatsink. Another issue was that the heatsink was not made for mounting to a Socket 7 board. This forced me to lay my computer’s chassis down on its side in order for gravity to keep the heatsink in place!

I had a plan to keep pressure on the heatsink to maintain better contact with the CPU. Using a bit of non-conductive material between the 3dfx card’s PCB and the heatsink, I kept things in place. I also had to permanently angle a large fan blowing into the now open case.

This was the price I would pay to have my budget 3dfx Voodoo 2-enabled system.

The Retro 3dfx Voodoo 2 PC Build

Recently, after a bout of nostalgia and watching LGR videos, I started trawling eBay for old PC parts. The goal was to match my original PC build as closely as possible.

After a number of weeks of searching, I found the following parts. The motherboard was the most difficult to find (at a good price). Most Super Socket 7 boards I found were dead and listed for spare parts.

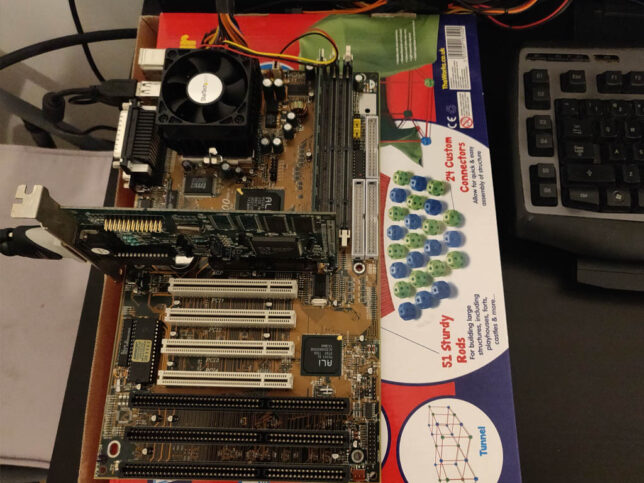

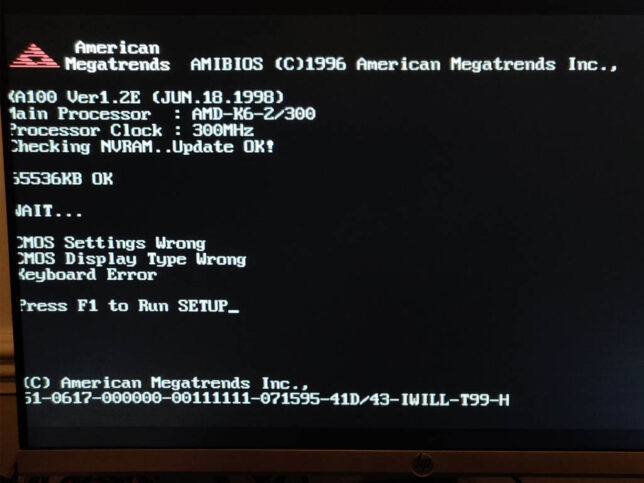

- Iwill XA100 Aladdin V Super7 Motherboard, an AMD K6-2 300 CPU, and 64MB of PC100 SDRAM (168 pin)

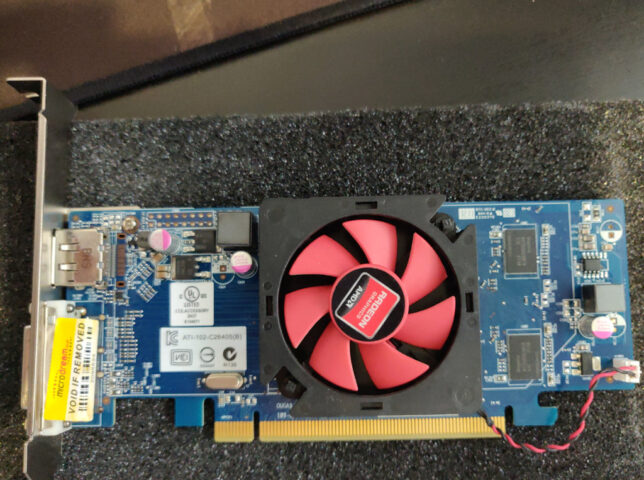

- S3 Trio 3D/2X graphics card (the Voodoo only handles 3D, and uses a loopback cable to plug into a ‘2D’ card)

- 3DFX JoyMedia Apollo 3D fast II 12MB Video Card PCI Voodoo2 3DFX V2 Rev A1

- A retro-styled, beige ATX mid-tower PC chassis

- Creative Sound Blaster Live 5.1 Digital SB0220 PCI Sound Card

- An 80GB IDE HDD, IDE ribbon cable, and an older DVD-R drive.

- A modern 80 Plus certified ATX power supply. It has a power connector with the older 20-pin layout, which was perfect to re-use.

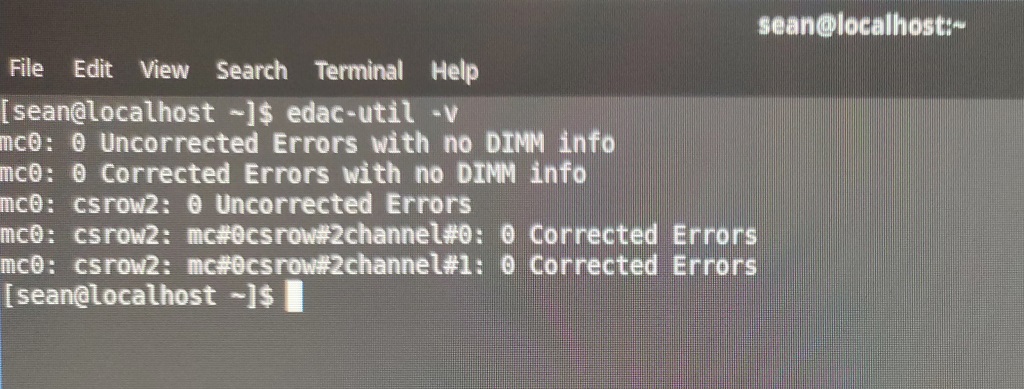

To test the hardware, I powered up the basic components on top of a cardboard box. This is generally a good idea, and especially so when using older hardware.

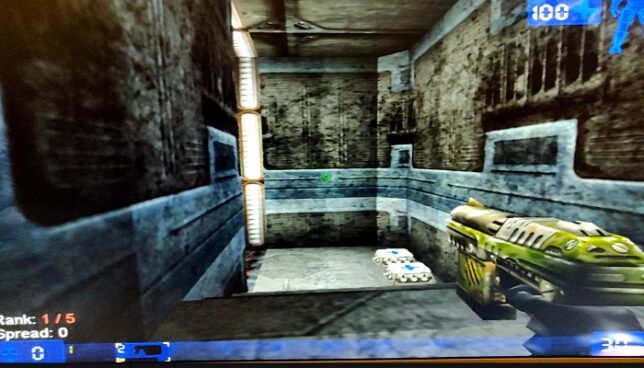

Gaming on the Retro PC Build

After digging out a CD burner and an old image of Windows 98 SE, I’ve got an operating system running and have installed drivers along with a bunch of games.

They play just as I remember. I’ve installed Quake, Quake 2, Unreal Tournament, and a few others to keep me busy for now.

The next step is to replace the temporary modern-day LCD monitor I’m using right now. A period-correct CRT monitor would really complete the build. I remember Samsung SyncMaster CRTs being excellent. My aim is to pick one of these up to complete the system.

If you’re nostalgic like me and want to revisit the PC and games hardware of the late 1990s, I highly recommend a build like this.

Follow my ‘Retro’ PC series blog posts: