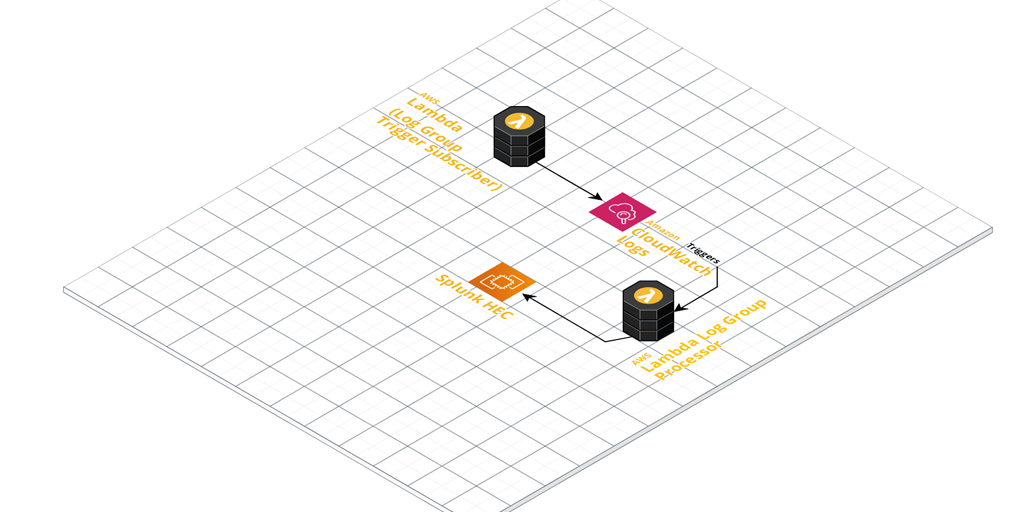

I recently came across a scenario requiring CloudWatch log ingestion to a private Splunk HEC (HTTP Event Collector).

The first and preferred method of ingesting CloudWatch Logs into Splunk is AWS Firehose. The problem, though, is that Firehose only seems to support endpoints that are reachable from the public internet.

This is a problem if you have a Splunk HEC that is only available inside of a VPC and there is no option to proxy public connections back to it.

The next thing I looked at was the Splunk AWS Lambda function template to ingest CloudWatch logs from Log Group events. I had a quick look and it seems pretty out of date, with synchronous functions and libraries in use.

So, I decided to put together a small AWS Lambda Serverless project to improve on what is currently out there.

You can find the code over on GitHub.

The new version has:

- async / await throughout, with promises that wrap the synchronous libraries like zlib.

- A module that handles identification of Log Group names based on a custom regex pattern. If events come from log groups that don’t match the naming convention, then they get rejected. The idea is that you can write another small function that auto-subscribes Log Groups.

- Secrets Manager integration for loading the Splunk HEC token (or fall back to a simple environment variable if you like).

- Serverless Framework wrapper. Pass in your Security Group ID, Subnet IDs and tags, and let the serverless CLI deploy the function for you.

- Lambda VPC support by default. You should deploy this Lambda function in a VPC. You could change that, but my idea here is that most enterprises run their own internal Splunk inside their corporate / VPC network. If you do happen to have a public-facing Splunk, remove the VPC section in serverless.yml.
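To make the Secrets Manager bullet above a bit more concrete, here is a rough sketch of how a token loader with an environment variable fallback could look. It is not lifted from the repository: the environment variable names `SECRET_MANAGER_ITEM_NAME` and `SPLUNK_HEC_TOKEN` and the injectable `fetchSecret` parameter are my own assumptions for illustration, and the default fetcher assumes the AWS SDK for JavaScript v3 is available in the runtime.

```javascript
// Sketch only: the environment variable names and the injectable fetchSecret
// parameter are assumptions, not names taken from the repository.

// Default fetcher using the AWS SDK for JavaScript v3. The SDK is required
// lazily so this module still loads where the SDK is not installed.
async function fetchSecretFromAws(secretId) {
  const {
    SecretsManagerClient,
    GetSecretValueCommand,
  } = require("@aws-sdk/client-secrets-manager");
  const client = new SecretsManagerClient({});
  const response = await client.send(
    new GetSecretValueCommand({ SecretId: secretId })
  );
  return response.SecretString;
}

// Resolve the HEC token: try Secrets Manager first when an entry name is
// configured, otherwise fall back to the plain environment variable.
async function loadHecToken(env = process.env, fetchSecret = fetchSecretFromAws) {
  if (env.SECRET_MANAGER_ITEM_NAME) {
    const secret = await fetchSecret(env.SECRET_MANAGER_ITEM_NAME);
    if (secret) return secret;
  }
  if (env.SPLUNK_HEC_TOKEN) return env.SPLUNK_HEC_TOKEN;
  throw new Error("No Splunk HEC token configured");
}
```

Passing the fetcher in as a parameter keeps the fallback logic easy to unit test without touching AWS.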

You deploy it using the Serverless Framework, passing in your VPC details and a few other options for customisation.

    serverless deploy --stage test \
      --iamRole arn:aws:iam::123456789012:role/lambda-vpc-execution-role \
      --securityGroupId sg-12345 \
      --privateSubnetA subnet-123 \
      --privateSubnetB subnet-456 \
      --privateSubnetC subnet-789 \
      --splunkHecUrl https://your-splunk-hec:8088/services/collector \
      --secretManagerItemName your/secretmanager/entry/here

Once configured, it’ll pick up any log events coming in from Log Groups you’ve ‘subscribed’ it to (Lambda CloudWatch Logs Triggers).
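For context, a CloudWatch Logs trigger hands the function its batch as base64-encoded, gzip-compressed JSON under `event.awslogs.data`. Below is a minimal sketch of decoding it with async / await, using `util.promisify` around the callback-style `zlib.gunzip`. The function name `decodeLogsEvent` is hypothetical, and this is one way to do it rather than necessarily how the repository does.

```javascript
const zlib = require("zlib");
const { promisify } = require("util");

// Promise-returning wrapper around the callback-style zlib.gunzip.
const gunzip = promisify(zlib.gunzip);

// Decode the payload of a CloudWatch Logs trigger event.
// The result carries fields such as logGroup, logStream and logEvents.
async function decodeLogsEvent(event) {
  const compressed = Buffer.from(event.awslogs.data, "base64");
  const json = await gunzip(compressed);
  return JSON.parse(json.toString("utf8"));
}
```

In a handler this would be called as `const decoded = await decodeLogsEvent(event);` before any filtering or enrichment happens.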

These events get enriched with extra metadata defined in the function. The metadata is derived by default from the naming convention used in the CloudWatch Log Groups. Take a close look at the included Regex pattern to ensure you name your Log Groups appropriately. Finally, they’re sent to your Splunk HEC for ingestion.
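As an illustration of the enrichment step, here is a small sketch that derives metadata from the Log Group name and shapes each log event into the JSON format the HEC accepts. The naming convention `/<environment>/<application>/<component>`, the regex, and the function names are all made up for this example. The pattern shipped in the repository will differ, so check it rather than relying on this one.

```javascript
// Hypothetical naming convention: /<environment>/<application>/<component>
const LOG_GROUP_PATTERN =
  /^\/(?<environment>[a-z0-9-]+)\/(?<application>[a-z0-9-]+)\/(?<component>[a-z0-9-]+)$/;

// Return the metadata encoded in the Log Group name, or null if the name
// does not follow the convention.
function parseLogGroup(logGroup) {
  const match = LOG_GROUP_PATTERN.exec(logGroup);
  return match ? { ...match.groups } : null;
}

// Turn a decoded CloudWatch Logs payload into an array of HEC events,
// rejecting Log Groups that fall outside the naming convention.
function toHecEvents(decoded) {
  const metadata = parseLogGroup(decoded.logGroup);
  if (!metadata) {
    throw new Error(
      `Log group ${decoded.logGroup} does not match the naming convention`
    );
  }
  return decoded.logEvents.map((logEvent) => ({
    time: logEvent.timestamp / 1000, // CloudWatch uses milliseconds, HEC expects seconds
    source: decoded.logGroup,
    event: logEvent.message,
    fields: { ...metadata, logStream: decoded.logStream },
  }));
}
```

The resulting objects can then be POSTed to the HEC URL with an `Authorization: Splunk <token>` header.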

For an automated Log Group ingestion story, write another small helper function that:

- Looks for Log Groups that are not yet subscribed as CloudWatch Logs Triggers.

- Adds them to your CloudWatch to Splunk HEC function as a trigger and enables it.
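The two steps above could be sketched roughly like this. To keep the logic testable without AWS, the sketch takes a hypothetical `logs` wrapper object whose three methods you would back with the CloudWatch Logs `DescribeLogGroups`, `DescribeSubscriptionFilters` and `PutSubscriptionFilter` API calls. The wrapper, its method names, and `subscribeMissingLogGroups` are assumptions of mine, not code from the repository.

```javascript
// `logs` is a hypothetical thin wrapper around the CloudWatch Logs API:
//   listLogGroupNames()            -> DescribeLogGroups (paginated)
//   listSubscriptionFilters(name)  -> DescribeSubscriptionFilters
//   putSubscriptionFilter(params)  -> PutSubscriptionFilter
// `pattern` should be a RegExp without the global flag, since test() on a
// global regex is stateful.
async function subscribeMissingLogGroups(
  logs,
  { pattern, destinationArn, filterName }
) {
  const subscribed = [];
  for (const logGroupName of await logs.listLogGroupNames()) {
    // Skip Log Groups outside the naming convention.
    if (!pattern.test(logGroupName)) continue;

    // Skip Log Groups that already forward to the Splunk function.
    const filters = await logs.listSubscriptionFilters(logGroupName);
    if (filters.some((f) => f.destinationArn === destinationArn)) continue;

    await logs.putSubscriptionFilter({
      logGroupName,
      filterName,
      filterPattern: "", // an empty pattern forwards every event
      destinationArn,
    });
    subscribed.push(logGroupName);
  }
  return subscribed;
}
```

Note that the Splunk function also needs a resource policy that allows CloudWatch Logs to invoke it, otherwise adding the subscription filter will fail.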

In the future I might add this ‘automatic trigger adding function’ to the GitHub repository, so stay tuned!